## Defining AI Agents

The narrative around AI agents is getting louder. We have moved from talking about LLMs to talking about agentic AI.

One of my pet peeves is loose use of the [[Working with words in a startup|words]]^[I spent a lot of time in my startup writing definitions and being a strict editor of the nomenclature used to define jobs. I was so particular about word quality that one of my team members created a [meme](https://buttondown-attachments.s3.us-west-2.amazonaws.com/images/ce2145aa-34e1-444a-9174-9987f636f2c3.jpeg) out of it.] we use to define things. [Simon Willison](https://simonwillison.net/2025/Sep/18/agents/) falls into the same bracket of people:

> Jargon terms are only useful if you can be confident that the people you are talking to share the same definition! If they don’t then communication becomes _less_ effective—you can waste time passionately discussing entirely different concepts.

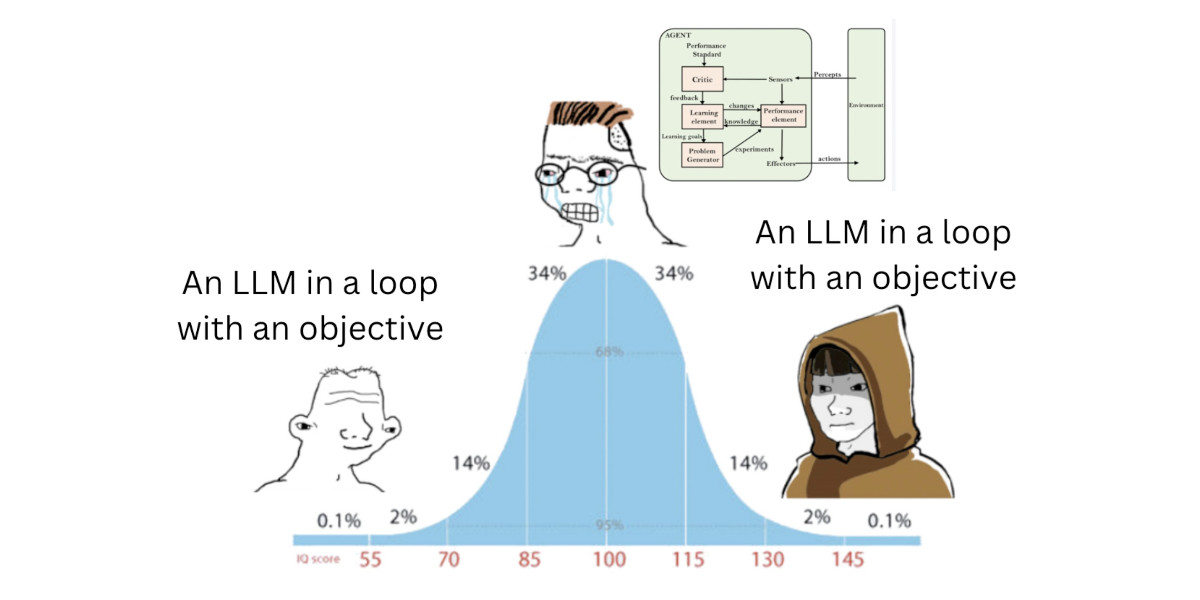

In his [article](https://simonwillison.net/2025/Sep/18/agents/) on defining agents in AI, he refers to a meme [tweet](https://twitter.com/josh_bickett/status/1725556267014595032) by Josh Bickett:

> What is an AI agent?

Simon suggests that a broad enough consensus has now been reached in the AI engineering space:

> An LLM agent _runs tools in a loop to achieve a goal_. Let’s break that down.
>
> The “tools in a loop” definition has been popular for a while—Anthropic in particular have [settled on that one](https://simonwillison.net/2025/May/22/tools-in-a-loop/). This is the pattern baked into many LLM APIs as tools or function calls—the LLM is given the ability to request actions to be executed by its harness, and the outcome of those tools is fed back into the model so it can continue to reason through and solve the given problem.
>
> “To achieve a goal” reflects that these are not infinite loops—there is a stopping condition.
>
> I debated whether to specify “... a goal set by a user”. I decided that’s not a necessary part of this definition: we already have sub-agent patterns where another LLM sets the goal (see [Claude Code](https://simonwillison.net/2025/Jun/2/claude-trace/) and [Claude Research](https://simonwillison.net/2025/Jun/14/multi-agent-research-system/)).

In other words, an AI agent executes a sequence of steps in a loop towards a specific goal. In this post I want to focus on the goal, and on why it becomes the gateway to deploying AI agents.
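
To make the "tools in a loop" definition concrete, here is a minimal Python sketch. It assumes a stubbed `fake_model` function in place of a real LLM and a hypothetical `TOOLS` registry; none of these names come from Simon's article or from any specific LLM API. What it shows is the shape of the pattern: the model requests a tool, the harness executes it, the outcome is fed back, and the loop stops once the goal is met, with `max_steps` as an assumed safety cap.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool registry: plain functions the harness is allowed to execute.
TOOLS: Dict[str, Callable[[int], int]] = {
    "add_one": lambda x: x + 1,
    "double": lambda x: x * 2,
}

def fake_model(goal: int, state: int) -> Optional[str]:
    """Stand-in for an LLM: names the next tool to run, or None once the goal is met."""
    if state == goal:
        return None  # stopping condition: goal achieved
    # Naive policy: double while that does not overshoot the goal, else add one.
    return "double" if state * 2 <= goal else "add_one"

def run_agent(goal: int, state: int = 1, max_steps: int = 20) -> Tuple[int, List[str]]:
    """Run tools in a loop until the model signals that the goal is reached."""
    trace: List[str] = []
    for _ in range(max_steps):  # hard cap so the loop cannot run forever
        tool_name = fake_model(goal, state)
        if tool_name is None:
            break
        state = TOOLS[tool_name](state)  # the harness executes the requested tool...
        trace.append(tool_name)          # ...and the new state is fed back next turn
    return state, trace
```

Calling `run_agent(10)` starts from 1 and returns `(10, ['double', 'double', 'double', 'add_one', 'add_one'])`. The goal, not the loop, decides when the agent is done.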

## Enhancing productivity is the objective

Multiple reports about productivity gains from AI have started to surface. Public companies in logistics have also reported double-digit productivity increases in their earnings reports^[Companies like C.H. Robinson, RXO and other large public companies highlighted their productivity gains in their Q3 2025 earnings reports.].

Logistics is a [[Legacy domains|legacy]] domain.

> 🚨 Legacy domains are characterised by the absence of systematic incentives for the frontline workforce to change the status quo.

This means we should expect pushback from the industry's current workforce. Pushing AI agents without factoring in the necessary change management will lead to catastrophic outcomes.

When writing about the use of [[What is one key use case where LLMs can be effectively applied to benefit Indian transporter?|LLMs in the Indian transporter scenario]], I wrote the following:

> LLMs enable creative solutioning with incredible compute power to receive a step change increase in productivity (throughput of executing jobs).

I then illustrated how this would play out with the example of booking a truck:

> ![[Transporter_Workflows.png]]
>
> ……
>
> With LLMs we build an agent that helps a transporter execute all 4 workflows without changing the way they work. It replicates the mode of input (talking, or rather shouting, at the agent), then structures the prompt and executes all 4 workflows simultaneously to complete the task of booking a truck in a fraction of the time.
>
> - Inform the shipper of the price band for transportation.
> - Check availability of trucks within their network and negotiate a price with the truck owner.
> - Reference the history of the lane to prioritise whom to call.
> - Execute this multiple times until a match is found.
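
The four workflows above can be sketched as a single loop. This is a hypothetical illustration, not a production design: the `Truck` fields, the 10% price band in `price_band` and the 5% tolerance in `negotiate` are assumptions made up for the example, and the steps run sequentially for readability rather than simultaneously as the quote describes.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Truck:
    owner: str
    available: bool
    asking_price: int  # hypothetical ask, e.g. in INR
    lane_trips: int    # past trips on this lane, i.e. the lane history

def price_band(base_rate: int, margin_pct: int = 10) -> Tuple[int, int]:
    """Step 1 (assumed rule): band of +/- margin_pct around a base rate, quoted to the shipper."""
    return base_rate * (100 - margin_pct) // 100, base_rate * (100 + margin_pct) // 100

def negotiate(truck: Truck, ceiling: int) -> Optional[int]:
    """Assumed rule: accept the ask if within the ceiling; if at most 5% above, settle at the ceiling."""
    if truck.asking_price <= ceiling:
        return truck.asking_price
    if truck.asking_price * 100 <= ceiling * 105:
        return ceiling
    return None  # too far apart: move on to the next truck

def book_truck(network: List[Truck], base_rate: int) -> Optional[Tuple[str, int]]:
    _, ceiling = price_band(base_rate)  # upper bound of the band caps negotiation
    # Step 2: check availability within the transporter's own network
    candidates = [t for t in network if t.available]
    # Step 3: use lane history to prioritise whom to call first
    candidates.sort(key=lambda t: t.lane_trips, reverse=True)
    # Step 4: keep calling and negotiating until a match is found
    for truck in candidates:
        price = negotiate(truck, ceiling)
        if price is not None:
            return truck.owner, price
    return None  # no match: hand back to the transporter
```

With a base rate of 50000, the band is (45000, 55000). If the available owner with the richest lane history asks 56000, the sketch settles at 55000 with that owner, which is exactly the kind of outcome a transporter may want to sanity-check.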

I described how the AI agent would execute this loop to book a truck for the transporter, but I didn’t explain why the transporter should let the AI agent execute this workflow for him/her.

We can reasonably assume that letting an AI agent perform this workflow would increase the transporter’s productivity. But the change management needed to let the AI agent work on the transporter’s behalf will fall flat if we can’t articulate the reason behind it.

## Optimising workflows through a service framework

We begin with the old adage about service: good, fast or cheap, you can only pick any 2 of the 3.

![[IMG_1793.jpeg]]

Trying to optimise the service on all three dimensions at once leads to an unsustainable service.

![[IMG_1794.jpeg]]

When the foundation model and agentic AI narratives started surfacing, I believed we could use them to sustain service levels across all 3 dimensions, and do so autonomously, without any human in the loop.

But as I spent more time using different models and understanding their current capabilities, I realised my initial hypothesis was wrong.

I work in an organisation that views AI agents as labour embedded in the product (emphasis mine):

> The first major theme Jonah shared was about **embedding labor into the product**. He gave several examples. In Transporeon’s Marketplace, AI now verifies member information and credentials at scale (more than 50,000 members per quarter), eliminating manual checks. When shippers upload contracts or rate tables, AI automatically reads and maps them into Transporeon’s rate management and procurement systems. Even something as routine as calling to schedule a dock appointment can now be handled by an AI agent. In short, **Transporeon is taking the manual work required to operate software and building it into the software itself**.
>
> [Takeaways from Transporeon Summit 2025](https://talkinglogistics.com/2025/11/04/takeaways-from-transporeon-summit-2025/)

Circling back to my Indian transporter example: an AI agent with the goal of booking a truck will run through the steps of the workflows in a loop until it succeeds.

Since the outcome is probabilistic, the transporter will be wary of the output, because the truck booked may not be the right one. It may be more expensive than the one he/she would have booked, or it may be a bad choice (poor quality).

This creates a perverse incentive towards distrust, which stops the AI agent from becoming a colleague.

In this scenario, we can have the AI agent double-check with the transporter on the price band and the shortlisted trucks before making the calls and booking a truck, similar to how a manager plans with his/her team.

This lets the transporter focus on the good and cheap parts of the service he/she provides to customers, while the AI agent is busy delivering it fast.

By building and positioning AI agents in this manner, we would not only increase productivity but also improve the transporter’s service levels far more cost-effectively than with human labour.
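
The check-in step described above can be sketched as an approval gate placed in front of the agent's execution loop. Everything here is hypothetical: the `Plan` type, the `review` callback (standing in for the transporter) and the `call` function (standing in for the agent phoning a truck owner) are illustrative names, not part of any real product. The only point is that no calls are made until the transporter has approved or edited the price band and shortlist.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

@dataclass
class Plan:
    """Hypothetical plan the agent proposes before making any calls."""
    price_band: Tuple[int, int]                         # (low, high) quoted to the shipper
    shortlist: List[str] = field(default_factory=list)  # truck owners, in calling order

# The transporter's review: return the (possibly edited) plan, or None to veto it.
Reviewer = Callable[[Plan], Optional[Plan]]
# The agent's phone call: True if the owner accepts at or below the given ceiling.
Caller = Callable[[str, int], bool]

def run_with_checkpoint(plan: Plan, review: Reviewer, call: Caller) -> Optional[str]:
    """Make calls only after the transporter has signed off on the plan."""
    approved = review(plan)
    if approved is None:
        return None  # vetoed: the agent makes no calls at all
    _, ceiling = approved.price_band
    for owner in approved.shortlist:  # the agent supplies the 'fast' part
        if call(owner, ceiling):
            return owner  # booked
    return None  # shortlist exhausted: hand back to the transporter
```

Modelling the review as a callback that can edit the plan, not just approve it, mirrors the manager-and-team analogy: the transporter can strike a truck he/she knows to be poor quality, and the agent then works through the remaining shortlist.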

## Simple, complicated and complex

> The simple and complicated workflows are dependent solely on internal factors: people, business logic and systems developed from a single component (activity) within an organisation.
>
> Complex workflows are dependent on another factor: the domain (overarching business) within which these workflows exist.
>
> [[Understandings Systems at work]]

The real skill for technology companies in legacy domains will be mapping workflows and deconstructing them into simple components.

This should be followed by framing each workflow within the service framework, and then building an AI agent, embedded as labour, that runs autonomously to achieve 1 specific goal: cheap, good or fast.

***

*All opinions in this post are solely mine and not of my current or any previous employer.*

***

`tags`: #logistics #PoV #transportation #ai

Originally published on: `09-11-25`

`Status`: #Brewing